Deep Learning Training vs. Inference: What’s the Difference?

In today’s data-saturated world, it’s hard to downplay the importance of machine learning. This branch of artificial intelligence (AI) not only equips systems with the ability to process large datasets, but also allows them to analyze live data and make real-time decisions.

When working with a dispersed network of Internet of Things (IoT) endpoints, it’s especially important to integrate solutions that can handle large amounts of newly amassed data. But machine learning and deep learning projects pose optimization challenges, and they can be tough to coordinate among corporate teams. It’s important for technical professionals to understand how deep learning training and inference work, so they can design systems that help corporations reap the benefits of AI.

Here, we’ll explain how deep learning training and inference work, and discuss the relationship between the two processes. Then, we’ll touch on the challenges of training and inference, and how to choose the best technology for your machine learning application.

The Machine Learning Life Cycle

Machine learning works in two main phases: training and inference. In the training phase, a developer feeds their model a curated dataset so that it can “learn” everything it needs to about the type of data it will analyze. Then, in the inference phase, the model can make predictions based on live data to produce actionable results.

What Is Machine Learning Inference?

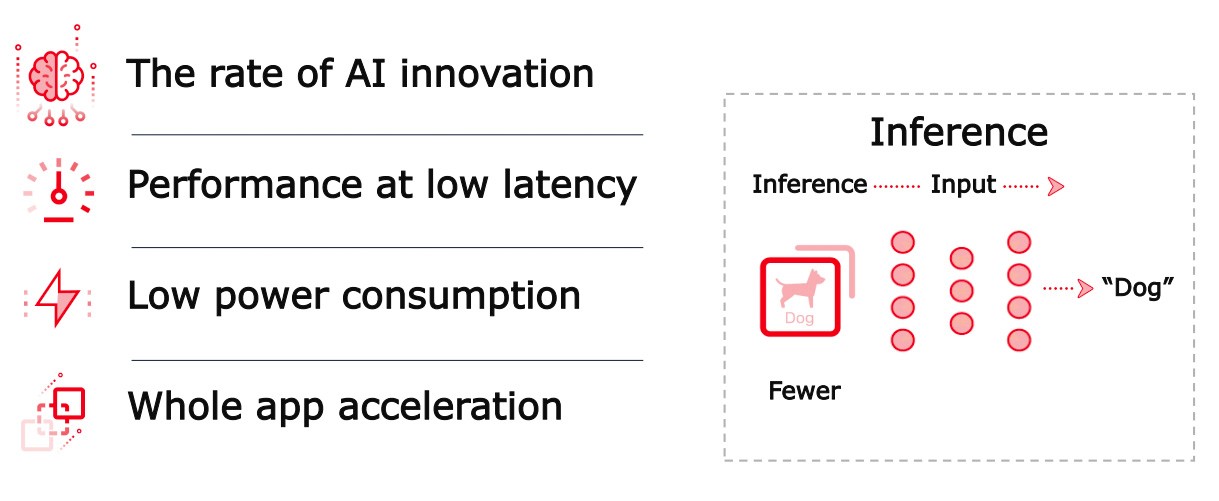

Machine learning inference is the ability of a system to make predictions from novel data. This can be helpful if you need to process large amounts of newly accumulated information from a widely dispersed IoT network, for example.

Here’s a simple analogy: Say you’re teaching a child to sort fruits by color. You might first show them a tomato, an apple, and a cherry so they learn that those fruits are red. Later, when you show them a strawberry for the first time, they’ll be able to infer that it is red, too, since its color is similar to that of the tomato, apple, and cherry.

How Does Machine Learning Inference Work?

There are three key components needed for machine learning inference: a data source, a machine learning system to process the data, and a data destination.

A data source may actually be several sources collecting information from different places, as when data is captured from an array of IoT inputs. The machine learning model applies a series of mathematical operations, learned during training, to sort and analyze live data as it comes in. These operations make deductions based on features in the data: measurable qualities that differentiate one item from another. The model then “scores” each data point according to how it was trained to interpret the data, and those output scores can determine the next course of action.
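To make the three components concrete, here is a minimal Python sketch. It assumes two hypothetical IoT sensors reporting `temperature` and `vibration`, and it uses a hand-set linear scoring function as a stand-in for a trained model. The weights, threshold, and function names are illustrative assumptions, not part of any real product.

```python
# Hypothetical model parameters, standing in for weights learned during training.
WEIGHTS = {"temperature": 0.04, "vibration": 1.5}
BIAS = -4.0


def merge_sources(sources):
    """Data source: flatten readings arriving from several IoT devices into one stream."""
    for source in sources:
        yield from source


def score(reading):
    """Model: a linear score over the features the model was trained to weigh."""
    return BIAS + sum(weight * reading[name] for name, weight in WEIGHTS.items())


def run_inference(sources, destination, threshold=0.0):
    """Score each reading and write the score and chosen action to the destination."""
    for reading in merge_sources(sources):
        s = score(reading)
        action = "inspect" if s > threshold else "ok"
        destination.append({"id": reading["id"], "score": s, "action": action})


alerts = []  # data destination (in practice: a dashboard, queue, or control system)
run_inference(
    [
        [{"id": "pump-1", "temperature": 50.0, "vibration": 1.0}],  # sensor group A
        [{"id": "pump-2", "temperature": 70.0, "vibration": 2.0}],  # sensor group B
    ],
    alerts,
)
print(alerts)
```

Each merged reading is scored and written, together with its action, to `alerts`, which plays the role of the data destination.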

For example, let’s say you’re collecting data from a customer service chatbot on an e-commerce site. Your data source would be the customer input from the online chat platform. Then, a machine learning model could determine that, if a customer writes that they are “dissatisfied” with a product, the customer may want a refund. A separate system could then help issue a refund for that customer.
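The chatbot example can be sketched the same way. In the hypothetical code below, a table of cue-word weights stands in for a trained text classifier (a real system would learn such weights from labeled conversations), and a plain list stands in for the separate refund system. All names and values are assumptions for illustration.

```python
import re

# Hypothetical cue-word weights standing in for a trained text classifier.
CUE_WEIGHTS = {"dissatisfied": 0.6, "refund": 0.5, "broken": 0.4, "return": 0.3}


def refund_likelihood(message):
    """Model: score how likely the customer is to want a refund (0.0 to 1.0)."""
    words = re.findall(r"[a-z']+", message.lower())
    return min(1.0, sum(CUE_WEIGHTS.get(word, 0.0) for word in words))


def handle_message(customer_id, message, refund_queue, threshold=0.5):
    """Take chat input (data source), score it, and route high scores onward."""
    score = refund_likelihood(message)
    if score >= threshold:
        refund_queue.append(customer_id)  # data destination: a separate refund system
    return score


refund_queue = []
handle_message("c-1001", "I'm dissatisfied with this blender.", refund_queue)
print(refund_queue)  # prints ['c-1001']
```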

What Is Deep Learning Training?

Deep learning training is the process by which a deep neural network (DNN) “learns” to analyze a predetermined set of data and make predictions about what it means. It is an iterative, trial-and-error process: the network repeatedly makes predictions, compares them with the desired outcomes, and adjusts itself until it can draw accurate conclusions.

DNNs are often described as a type of artificial intelligence because they loosely mimic how the brain works, using layers of interconnected artificial neurons. A DNN can learn from unstructured data, such as images and audio recordings.

How Does Deep Learning Training Work?

During deep learning training, a DNN sifts through preexisting data and draws conclusions based on what it “thinks” the data represents. Every time it reaches an incorrect conclusion, the size of the error is measured and fed back through the network (a process called backpropagation) so that it “learns” from its mistake.

Over many iterations, this process adjusts the strength of the connections (the weights) between artificial neurons, increasing the likelihood that the system will make accurate predictions. When it is later presented with novel data, the trained DNN should be able to categorize and analyze new and possibly more complex information. And if it is periodically retrained on newly collected data, it can keep improving over time.
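The feedback loop described above can be illustrated with a single artificial neuron trained by gradient descent. This is a deliberately simplified sketch, not a deep network: a real DNN has many layers and is usually trained with a framework that performs backpropagation automatically. The toy task (learning logical OR) and all names here are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Inference: a forward pass using fixed, already-trained parameters."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(examples, epochs=2000, learning_rate=0.5, seed=0):
    """Training: repeatedly predict, measure the error, and nudge the parameters."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in examples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for x, target in examples:
            # For a sigmoid output with cross-entropy loss, (prediction - target)
            # is the gradient of the loss with respect to the neuron's input sum.
            error = predict(weights, bias, x) - target
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


# Toy labeled dataset: learn logical OR from examples.
OR_EXAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(OR_EXAMPLES)
for x, _ in OR_EXAMPLES:
    print(x, round(predict(weights, bias, x), 3))
```

`train` changes the weights after every example, while `predict` only reads them. That split mirrors the two phases of the life cycle: once training finishes, inference is just the forward calculation with frozen parameters.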

What’s the Difference Between Deep Learning Training and Inference?

Deep learning inference can’t happen without training. The key difference between the two is that training builds the model, while inference puts the trained model to work on new data. In other words, training is the building block for inference.

Think about how humans learn to do a task—by studying how it’s done, either through reading a manual, watching how-to videos, or observing another person as they complete the task. We learn to mimic others in the same way that DNNs pick up on patterns and make inferences from existing data.

Deep Learning Training and Inference: An Example

Deep learning can be used in a wide variety of industries, from healthcare to aerospace engineering. But it’s especially helpful in situations where a machine needs to make many decisions in real time, such as with autonomous vehicles.

Say you’re training a driverless vehicle to obey traffic laws. The vehicle’s system would first need to learn the rules of the road. So a programmer would train it on datasets that help it learn to recognize traffic signs, stoplights, and pedestrians, among other things. By studying many examples, it would learn what it should do when it observes certain signs or scenarios.

But when driving on the road, the vehicle would need to make inferences based on its surroundings. Training the vehicle to stop at a red stop sign sounds simple, but what happens if a stop sign in the real world has a different font or is a slightly different shade of red than the ones in the dataset?

This is where deep learning inference comes in—it helps machines bridge the gap between the data they were trained on and ambiguous information in the real world. The vehicle could infer that the sign it came across is still a stop sign, thanks to a DNN that can extrapolate from features it recognizes.
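As a toy illustration of that kind of generalization, the sketch below classifies signs by a single average RGB color using a nearest-centroid rule. Real perception systems run convolutional networks on full camera images; the colors, labels, and function names here are hypothetical.

```python
from math import dist  # requires Python 3.8+


def train_centroids(examples):
    """Training: average the RGB color of the labeled examples in each class."""
    sums, counts = {}, {}
    for rgb, label in examples:
        total = sums.setdefault(label, [0.0, 0.0, 0.0])
        for i, channel in enumerate(rgb):
            total[i] += channel
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(c / counts[label] for c in total) for label, total in sums.items()}


def infer(centroids, rgb):
    """Inference: assign a never-seen color to the class with the nearest average."""
    return min(centroids, key=lambda label: dist(centroids[label], rgb))


# Hypothetical training colors for three sign categories.
TRAINING_SET = [
    ((200, 16, 46), "stop"), ((190, 20, 40), "stop"), ((210, 10, 50), "stop"),
    ((250, 200, 0), "warning"), ((240, 190, 20), "warning"),
    ((0, 120, 60), "guide"), ((10, 110, 70), "guide"),
]
centroids = train_centroids(TRAINING_SET)
print(infer(centroids, (170, 30, 60)))  # a faded red not in the training set; prints "stop"
```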

Challenges of Machine Learning Training and Inference

Both machine learning training and inference are computation-intensive in their own ways. On the training side, feeding a DNN large amounts of data demands substantial GPU computing power and may require more GPUs or more efficient ones. On the inference side, minimizing latency can be a challenge when the system must make decisions in real time.
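To see why training is heavier than inference, a common rule of thumb helps: a fully connected layer needs about 2 floating-point operations (FLOPs) per weight in the forward pass, and the backward pass costs roughly twice that, so one training step is about three times the cost of an inference pass. The sketch below applies that approximation to a hypothetical three-layer network; the layer sizes, dataset size, and epoch count are illustrative.

```python
def dense_forward_flops(layer_sizes):
    """Approximate FLOPs for one forward pass (one inference) of a fully connected net.

    Each weight contributes one multiply and one add (2 FLOPs). Biases and
    activation functions are ignored because they are small by comparison.
    """
    return sum(2 * n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def training_step_flops(layer_sizes, backward_multiplier=2):
    """Rule of thumb: the backward pass costs about twice the forward pass."""
    return dense_forward_flops(layer_sizes) * (1 + backward_multiplier)


def training_run_flops(layer_sizes, num_examples, epochs):
    """Total for training: one step per example, repeated for every epoch."""
    return training_step_flops(layer_sizes) * num_examples * epochs


LAYERS = [784, 256, 10]  # hypothetical input, hidden, and output sizes
print(f"one inference: {dense_forward_flops(LAYERS):,} FLOPs")
print(f"full training: {training_run_flops(LAYERS, 60_000, 10):,} FLOPs")
```

For these hypothetical sizes, one inference takes about 0.4 million FLOPs, while ten passes over 60,000 training examples take roughly 7.3e11 FLOPs. Inference is cheap per request, but it must be fast every time, which is why latency rather than raw compute tends to dominate on that side.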

Machine learning projects can also be tricky to coordinate across company-wide teams. For example, if a machine learning project is coded in TensorFlow but part of the team is more familiar with PyTorch, adapting the system for testing and deployment could cause problems. And updating models can be costly and difficult, especially if they need to be retrained on new data.

Also, AI hardware vendors provide different levels of software support for frameworks and layers, which makes it non-trivial to evaluate hardware options against the total cost of ownership (TCO) the project requires.
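One way to compare hardware options is to reduce each one to a cost per inference. The sketch below is a simplified model under stated assumptions: constant power draw, a fixed utilization, and a flat electricity price. Every number in it is a placeholder, and the `software_cost` field is a hypothetical way to account for porting effort when a framework or layer is not supported.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 365 * 24


@dataclass
class HardwareOption:
    name: str
    purchase_cost: float          # upfront hardware cost, dollars
    power_watts: float            # average draw, assumed constant around the clock
    inferences_per_second: float  # throughput measured on your own model
    software_cost: float = 0.0    # hypothetical: effort to port unsupported layers
    years_in_service: float = 3.0


def cost_per_million_inferences(option, dollars_per_kwh=0.12, utilization=0.5):
    """Rough TCO divided by the number of inferences served over the hardware's life."""
    hours = option.years_in_service * HOURS_PER_YEAR
    energy_cost = option.power_watts / 1000 * hours * dollars_per_kwh
    tco = option.purchase_cost + option.software_cost + energy_cost
    total_inferences = option.inferences_per_second * utilization * hours * 3600
    return tco / total_inferences * 1_000_000


options = [
    HardwareOption("option-a", purchase_cost=2000, power_watts=300, inferences_per_second=1000),
    HardwareOption("option-b", purchase_cost=8000, power_watts=250,
                   inferences_per_second=5000, software_cost=4000),
]
for opt in options:
    print(f"{opt.name}: ${cost_per_million_inferences(opt):.4f} per million inferences")
```

With these placeholder numbers, the pricier option still comes out cheaper per inference, even after its porting cost, because its throughput is higher. With your own measured numbers the ranking may well flip.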

The Future of AI Relies on Efficient Machine Learning Inference

Finding the right hardware solution is a vital building block for an AI system that performs efficiently and effectively. With their focus on machine learning inference, AMD machine learning tools can help software developers deploy applications for real-time inference, with support for many common machine learning frameworks, including TensorFlow, PyTorch, and Caffe, as well as Python and RESTful APIs.

This focus on accelerated machine learning inference is important for developers and their clients, especially since the global machine learning market could reach $152.24 billion in 2028.

Trust the Right Technology for Your Machine Learning Application

AI Inference & Machine Learning Solutions

AMD is an industry leader in machine learning and AI solutions, offering an AI inference development platform and hardware acceleration solutions that deliver high throughput and low latency.

Explore the AMD Vitis AI optimization platform, which offers adaptable, real-time machine learning solutions.